This week I published a research blog post with Databricks on how we shift the Pareto frontier of enterprise agents using automated prompt optimization. There are four highlights I’m especially excited about:

1. Open source beats frontier models

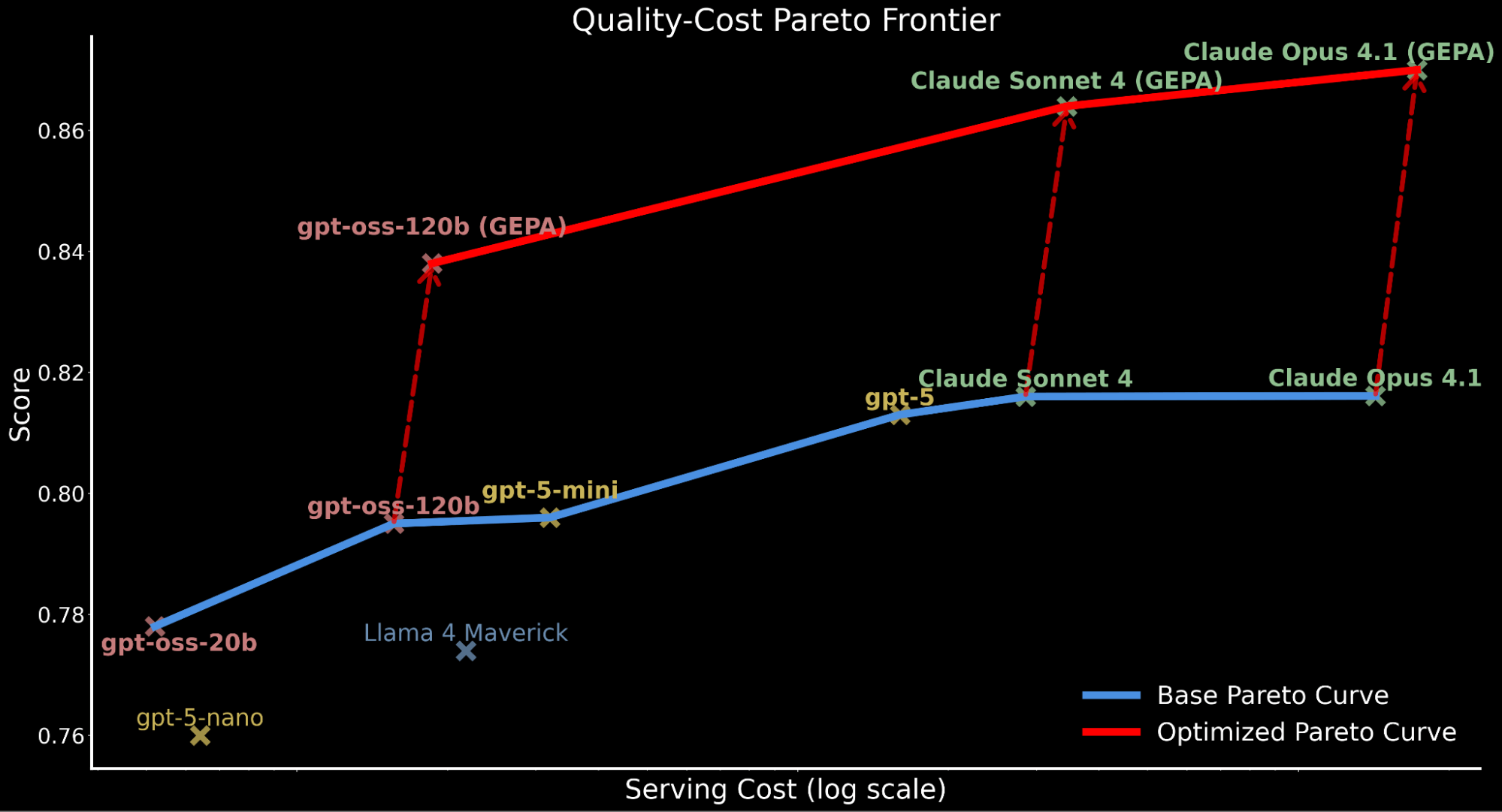

We improved gpt-oss-120b until it surpassed GPT-5 and Claude Opus 4.1 on enterprise-scale information extraction. Optimized with GEPA (a new technique from Databricks + UC Berkeley), gpt-oss-120b outperforms Opus 4.1 by 3% while being 90× cheaper to serve. GEPA combines natural-language reflection (to capture high-level task rules) with evolutionary search (to explore the space of candidate prompts), and the results are remarkable.
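To show how reflection and evolutionary search can fit together, here is a toy Python sketch. It is not the reference GEPA implementation. The "prompt" is just a set of rules, `reflect` is a hypothetical stand-in for an LLM that reads natural-language feedback and edits the prompt, and parent selection (any candidate that is best on at least one training example) is a simplified take on Pareto-based selection. All names and the example rules are hypothetical.

```python
from __future__ import annotations

import random
from dataclasses import dataclass

# Hypothetical toy setup: a "prompt" is a set of rules, and an example is
# solved only if the prompt states the rule that example needs.
MISSING = "missing rule: "


@dataclass(frozen=True)
class Example:
    text: str
    required_rule: str


def evaluate(prompt: frozenset[str], ex: Example) -> tuple[float, str]:
    """Return a score plus natural-language feedback for one example."""
    if ex.required_rule in prompt:
        return 1.0, "ok"
    return 0.0, MISSING + ex.required_rule


def reflect(prompt: frozenset[str], feedback: list[str]) -> frozenset[str]:
    """Hypothetical stand-in for an LLM reflection step: read the feedback
    and propose an edited prompt (here: add the first missing rule)."""
    for msg in feedback:
        if msg.startswith(MISSING):
            return prompt | {msg[len(MISSING):]}
    return prompt


def pareto_candidates(scores: list[list[float]]) -> list[int]:
    """Indices of candidates that achieve the best score on at least one example."""
    front: set[int] = set()
    for j in range(len(scores[0])):
        best = max(s[j] for s in scores)
        front.update(i for i, s in enumerate(scores) if s[j] == best)
    return sorted(front)


def optimize(seed_prompt, trainset, iterations=20, minibatch=3, seed=0):
    rng = random.Random(seed)
    pool = [seed_prompt]
    scores = [[evaluate(seed_prompt, ex)[0] for ex in trainset]]
    for _ in range(iterations):
        # Selection: pick a parent from the per-example Pareto front.
        parent = pool[rng.choice(pareto_candidates(scores))]
        batch = rng.sample(trainset, minibatch)
        # Mutation via reflection on feedback from a small minibatch.
        child = reflect(parent, [evaluate(parent, ex)[1] for ex in batch])
        # Keep the child only if it beats its parent on that minibatch.
        if sum(evaluate(child, ex)[0] for ex in batch) > sum(evaluate(parent, ex)[0] for ex in batch):
            pool.append(child)
            scores.append([evaluate(child, ex)[0] for ex in trainset])
    best = max(range(len(pool)), key=lambda i: sum(scores[i]))
    return pool[best], sum(scores[best]) / len(trainset)


trainset = [
    Example("doc 1", "normalize dates to ISO 8601"),
    Example("doc 2", "return null for absent fields"),
    Example("doc 3", "normalize dates to ISO 8601"),
    Example("doc 4", "copy currency codes verbatim"),
]
best_prompt, score = optimize(frozenset(), trainset, iterations=10, minibatch=2)
print(sorted(best_prompt), score)
```

In a real system the score would come from an actual metric and `reflect` would be an LLM call; the loop shape (select, reflect on feedback, keep improvements) is the part this sketch is meant to illustrate.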

Applying automated prompt optimization shifts the entire Pareto curve upwards
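For readers less familiar with the term: a configuration sits on the cost/quality Pareto frontier if no other configuration is at least as cheap and at least as accurate while being strictly better on one of the two. The minimal Python sketch below computes that frontier. The configuration names and all numbers are made up for illustration and are not results from the post.

```python
from typing import NamedTuple


class Point(NamedTuple):
    name: str
    cost: float      # serving cost per request (lower is better), arbitrary units
    accuracy: float  # task score (higher is better)


def dominates(a: Point, b: Point) -> bool:
    """a dominates b if it is no worse on both axes and strictly better on one."""
    no_worse = a.cost <= b.cost and a.accuracy >= b.accuracy
    strictly_better = a.cost < b.cost or a.accuracy > b.accuracy
    return no_worse and strictly_better


def pareto_frontier(points: list[Point]) -> list[Point]:
    """Non-dominated points, ordered from cheapest to most expensive."""
    frontier = [p for p in points if not any(dominates(q, p) for q in points)]
    return sorted(frontier, key=lambda p: p.cost)


# Illustrative numbers only.
baseline = [Point("A", 1.0, 0.60), Point("B", 5.0, 0.70),
            Point("C", 6.0, 0.65), Point("D", 20.0, 0.75)]
print([p.name for p in pareto_frontier(baseline)])  # → ['A', 'B', 'D']

# A hypothetical optimized variant of A: same cost, higher accuracy.
optimized = baseline + [Point("A+opt", 1.0, 0.72)]
print([p.name for p in pareto_frontier(optimized)])  # → ['A+opt', 'D']
```

Raising the accuracy of a cheap configuration is enough to knock out more expensive points that were previously on the frontier, which is what "shifting the curve upwards" means in practice.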

2. GEPA generalizes to frontier models

The same technique boosts Claude Sonnet 4 and Claude Opus 4.1, pushing them to new state-of-the-art performance. This shows how powerful and generalizable automated prompt optimization can be across model families.

We observe a consistent trend: automated prompt optimization delivers substantial gains over every model’s baseline performance.

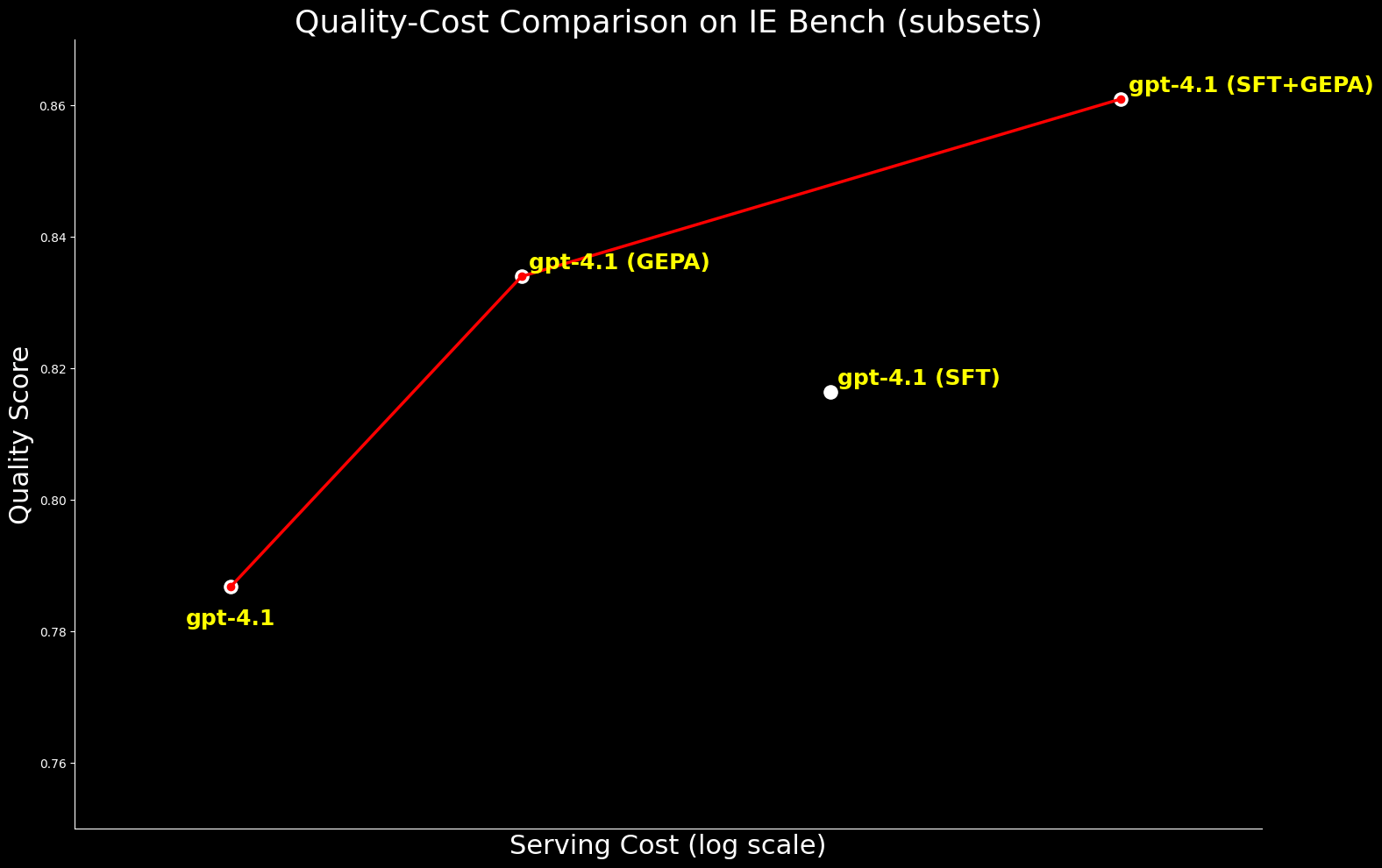

3. GEPA vs. SFT (and Better Together)

We compared GEPA with supervised fine-tuning (SFT) and found that GEPA can surpass SFT while being 20% cheaper to serve. Even better, combining both methods produced the highest performance, consistent with the findings of the prior work Better Together.

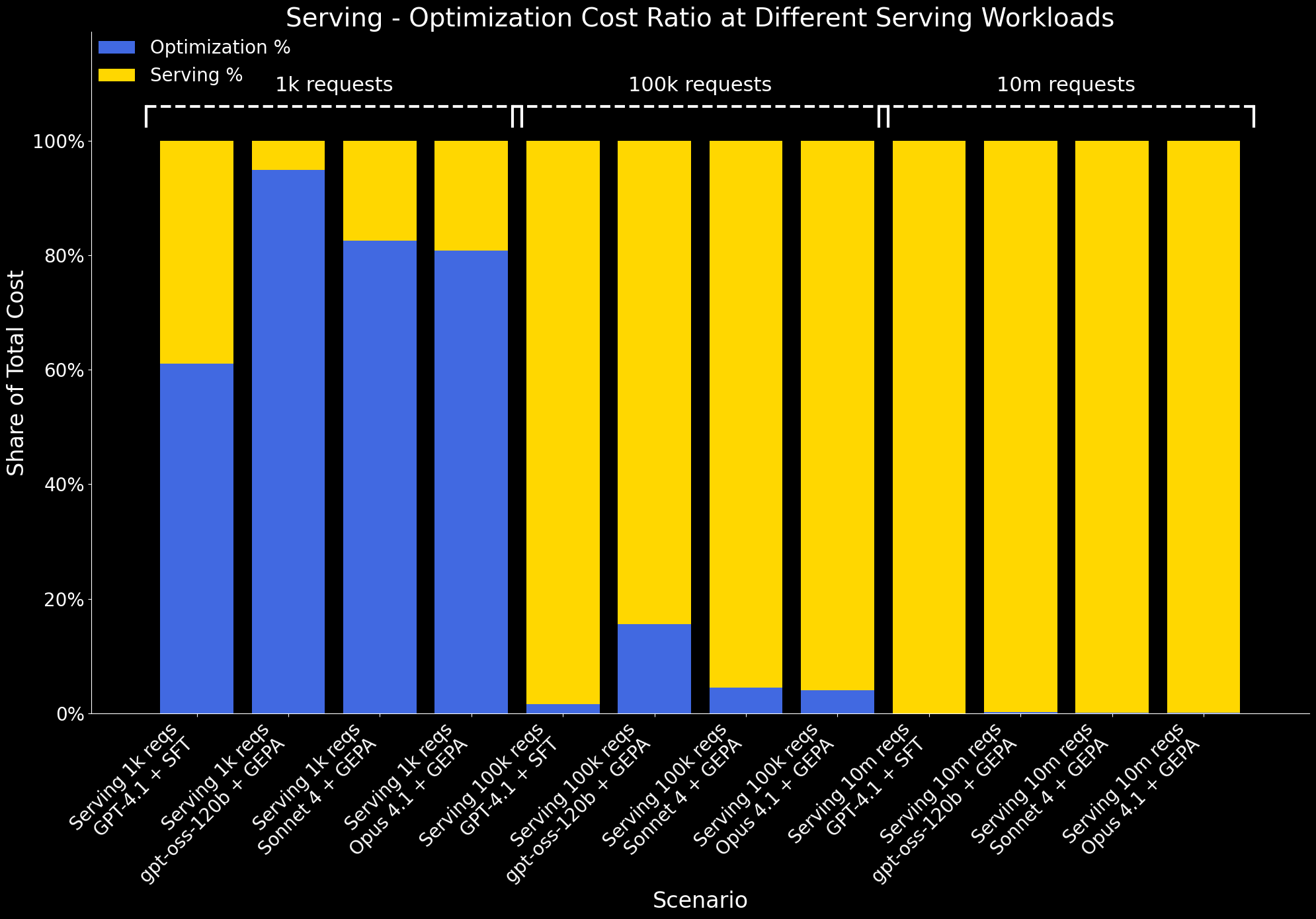

4. Lifetime cost analysis

We calculated the full lifetime cost of optimization plus serving at scale. GEPA + gpt-oss-120b delivers a lifetime cost orders of magnitude lower than SFT on GPT-4.1 or GEPA on Sonnet 4. Once serving volume reaches 100k+ requests, the one-time optimization overhead becomes negligible, so the benefits of higher accuracy and dramatically lower serving cost far outweigh the initial investment.
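The amortization argument is simple arithmetic: lifetime cost = one-time optimization cost + (number of requests × serving cost per request). The Python sketch below makes this concrete and also computes the request volume at which two setups cost the same. The `Setup` class, `break_even` helper, and all prices are hypothetical placeholders, not the figures from the blog post.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Setup:
    name: str
    one_time_cost: float     # e.g. a prompt-optimization or fine-tuning run, in dollars
    cost_per_request: float  # serving cost per request, in dollars

    def lifetime_cost(self, n_requests: int) -> float:
        return self.one_time_cost + n_requests * self.cost_per_request

    def overhead_share(self, n_requests: int) -> float:
        """Fraction of the lifetime cost spent on the one-time optimization."""
        total = self.lifetime_cost(n_requests)
        return self.one_time_cost / total if total else 0.0


def break_even(a: Setup, b: Setup) -> float | None:
    """Request volume at which a and b have equal lifetime cost, or None if never."""
    # Solve a.one_time + n * a.per_request == b.one_time + n * b.per_request for n.
    delta = b.cost_per_request - a.cost_per_request
    if delta == 0:
        return None
    n = (a.one_time_cost - b.one_time_cost) / delta
    return n if n >= 0 else None


# Placeholder prices for illustration only.
cheap = Setup("open model + prompt optimization", one_time_cost=100.0, cost_per_request=0.0005)
pricey = Setup("frontier model, no optimization", one_time_cost=0.0, cost_per_request=0.02)
for n in (1_000, 100_000, 10_000_000):
    print(n, round(cheap.lifetime_cost(n), 2), round(pricey.lifetime_cost(n), 2),
          f"overhead share: {cheap.overhead_share(n):.1%}")
print("break-even requests:", break_even(cheap, pricey))
```

Whatever the real prices are, the one-time term is fixed while the serving term grows linearly with volume, so the overhead share shrinks toward zero as traffic increases.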

For these reasons, we see GEPA + gpt-oss-120b as an extremely competitive option for enterprise deployments.

I highly recommend reading the full blog post here: https://www.databricks.com/blog/building-state-art-enterprise-agents-90x-cheaper-automated-prompt-optimization

A huge thank you to Matei Zaharia (Databricks CTO) and Erich Elsen (my tech lead) for their thoughtful feedback during review. Their honest suggestions led to more thorough experiments and a better-structured post. Special thanks to Matei for actively sharing this post, which helped amplify it on social media. I truly value the collaboration on this project and in our day-to-day work.

There’s a lot of exciting research happening at Databricks right now. We’re exploring the new paradigm of AI agents, grounded in real enterprise challenges, and pushing on both quality and cost efficiency. We’ve already published on RLVR, ALHF, and TAO, and I look forward to sharing more breakthroughs soon!